By Dale Vile

To some, the mere concept of enterprise datacentres still being around in ten years’ time is anathema. By then, it is asserted, all enterprise IT should be running in public clouds. The only people who can’t see this are insecure box-huggers frightened for their jobs, and IT dinosaurs with no imagination.

For the remainder of this discussion, however, we’re going to assume that the world’s IT won’t be under the total control of cloud providers such as Amazon, Microsoft, Google and Salesforce.com in 2023. That’s not to say we won’t be using more cloud services (we undoubtedly will), but infrastructure running on premises will probably still be the centre of gravity for IT in the majority of medium and large organisations.

So does that mean datacentres will remain as they are? Almost certainly not, if for no other reason than that natural refresh cycles will bring with them new technology and new ways of doing things, even if you have no plan to change things proactively.

Best to think ahead

If things are going to be evolving anyway, though, it makes sense to think ahead and make sure that changes are introduced in a coordinated and optimum manner. If you are dubious about the value of doing this, just take a look back over the last ten years.

While most IT departments have seen x86 server virtualisation enter their worlds, the ones that planned and managed its adoption in a considered manner are in a much better state today than those that just let things happen. If you took a proactive approach, you are probably enjoying the advantages of a more coherent, manageable and cost-effective environment. If you adopted server virtualisation in a more opportunistic or ad hoc way, there’s a good chance you are battling with virtual server sprawl and networking and storage bottlenecks, while still being a slave to a lot of tedious and error-prone manual administration that others have eradicated.

If you look across the datacentre computing world, you’ll find lots of other examples where new technology has had little or no impact, or even a negative impact, because of uncontrolled adoption: unmanaged SharePoint installations leading to document and information sprawl, tactical data warehouse initiatives creating yet more disjoints and integration headaches, ill-planned unified communications implementations running into quality of service issues, and so on.

Get it right, though, and it’s possible to move the game forward with every round of change and investment driving towards better efficiency, improved service levels, greater flexibility and, not least, an easier life for IT managers and professionals.

A forward-looking approach also means that investments today are more likely to be laying a firm foundation for the future. Those who took a structured and managed approach to server virtualisation, for example, now find themselves in a good position to start looking at advanced workload management and orchestration (a.k.a. private cloud).

However, it’s not just infrastructure and management technology that’s evolving; the needs and expectations of users and business stakeholders are too. It’s a bit of a cliché, but it really is true that IT departments are generally being asked to deliver more for less each year, and at a faster pace. It’s therefore worth taking a minute to consider some of the specifics here.

Inescapable pressures and demands

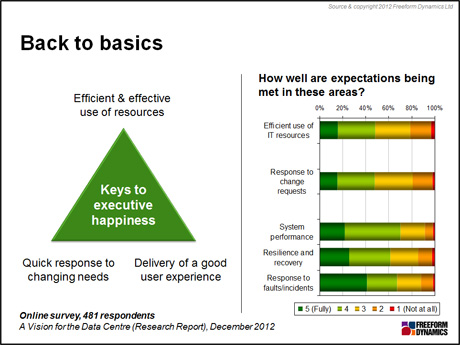

At the highest level, it’s possible to break out business level expectations of data centre computing into three main areas:

- 1. Efficient and effective use of resources (assets, people, external services, etc.)

- 2. Quick response to changing needs (new requirements, additional capacity, etc.)

- 3. Delivery of a good user experience (systems performance, availability, etc.)

These are summarised on the following figure, which also shows (based on a recent reader study) that IT departments are often perceived to fall short in these areas:

And things aren’t going to get any easier. Continuous change and the increasing pace of change at a business level come out strongly when we interview senior business managers, as does the degree to which business processes are becoming ever more dependent on IT through greater automation of business operations and direct interaction with customers and trading partners over the internet.

Meanwhile, with everyone from management consultants, through investment analysts, to politicians and the mainstream media talking about cloud computing, the ‘C’ word has now made it into executive vocabulary. Even though business people often can’t articulate the significance of cloud computing in any precise, accurate or meaningful way, its entry onto the scene has pushed the question of IT sourcing further up the business agenda.

The hosted cloud opportunity and challenge

Over the past few years, we have seen an explosion in the number and variety of cloud services available on the market, from basic hosted infrastructure at one end, to full-blown business applications at the other. The promises that typically accompany these services are now widely known – no up-front costs, fast access to new capability, less IT infrastructure to worry about, increased ongoing flexibility, and so on.

Less well publicised are the challenges that can arise when you start to make broader and more extensive use of cloud. As the number and type of services consumed proliferate, ensuring adequate integration between offerings from different providers, and between critical cloud services and internal systems, can become a problem. Related to this, end-to-end service level assurance often becomes more difficult, as does troubleshooting across system and service boundaries, protecting information, assuring compliance, and, not least, monitoring and controlling costs.

To be clear, most of the problems are not to do with individual cloud services themselves (assuming you do your due diligence on providers); it’s more about making sure everything works together safely and cost-effectively. And in this respect, the ease with which end-user departments, workgroups and even individual employees can adopt cloud services, while never thinking about integration, interoperability or information-related requirements, already represents a challenge for some organisations.

Dale is a co-founder of Freeform Dynamics, and today runs the company. As part of this, he oversees the organisation’s industry coverage and research agenda, which tracks technology trends and developments, along with IT-related buying behaviour among mainstream enterprises, SMBs and public sector organisations.
