The cost of supplying IT services inside businesses has never been more visible, with considerable marketing attention asking ‘why aren’t you using cloud-based services rather than running your own systems?’ This is putting even more pressure on IT departments to justify their budgets and to show they are doing a good job. Just how will financing and budget models need to change in the coming years as business pressure on IT services ramps ever higher?

History and the current situation

For most of the past two or three decades, the bulk of major IT infrastructure spend, certainly on servers and storage, has been directed at discrete new or upgraded applications. This typically resulted in datacentres and computer rooms filling up with large numbers of servers, each with its own storage system, operating in isolation and usually running a single piece of business software.

Even as technology has developed to allow servers to run multiple applications, and storage platforms to be virtualised and shared, many organisations have continued to operate their computer systems as an inefficient series of islands. In addition to the straitjacket created by narrowly defined budgets, line-of-business managers are often reluctant to allow server and storage hardware allocated to their department or division to be “shared” with their peers.

The net result is that many organisations are today unable to fully optimise their physical resources or operate anywhere close to full capacity. This inconsistent systems landscape is difficult to manage, relying heavily on people-intensive administration with little automation of routine processes. Needless to say, the associated IT procurement tends to be complex and time-consuming.

The drivers initiating change

Most datacentres have been designed to deliver IT services that could be expected to run for several years. This, coupled with stringent change controls and testing, often means that installed systems are scoped for scale and performance before much “real” service experience has been gathered. As a consequence, many, if not most, operational systems have spare capacity designed in from day one, i.e. over-provisioning has been used to provide headroom for growth.
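A short sketch shows how much idle capacity this kind of up-front sizing builds in. It computes the share of capacity in use on day one when a system is sized for several years of compound growth. The growth rates and planning horizons are hypothetical. The sketch also assumes capacity is bought once, sized exactly for final-year demand, with steady growth in between; real demand is rarely that tidy.

```python
def day_one_utilisation(annual_growth: float, years_of_headroom: int) -> float:
    """Fraction of installed capacity in use on day one, assuming capacity is
    sized exactly for the demand reached after years_of_headroom years of
    steady compound growth at annual_growth (0.3 means 30% a year)."""
    final_demand_multiple = (1 + annual_growth) ** years_of_headroom
    return 1 / final_demand_multiple


# Hypothetical planning assumptions, not survey data.
for growth, years in [(0.2, 3), (0.3, 4)]:
    share = day_one_utilisation(growth, years)
    print(f"{growth:.0%} growth over {years} years: {share:.0%} used on day one")
```

With 30% annual growth and a four-year horizon, only about 35% of the installed capacity is in use on day one. The rest is headroom that has been paid for but not yet used.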

Today, however, business needs may change very rapidly, placing great pressure on IT to respond to new requests on very short timescales with limited time to plan. Beyond this, organisations are now operating under financial conditions and external oversight that make it very difficult to spend money on resources that may not be utilised for months, or even years.

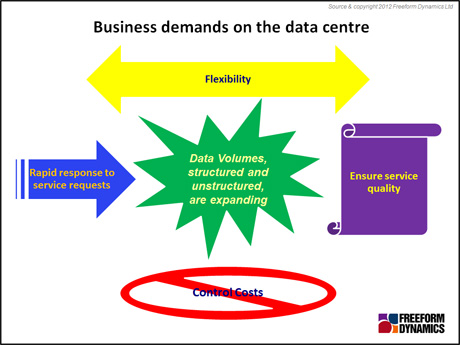

Figure 1 summarises the major drivers reported as important in the ongoing evolution of IT service provision and datacentre modernisation.

Figure 1

Datacentre technology impacts

There is little doubt that the needs of modern businesses for IT to deliver services rapidly to an expanding range of devices and users will result in fundamental alterations to the ways in which solutions are procured and operated. The scope of these changes will, over time, expand to include the core technologies utilised inside the datacentre, and the management tools employed to keep things operational. Let’s have a look in more detail at some of the specifics.

Resource Optimisation – x86 / x64 Servers

The systematic over-provisioning of IT systems, and the inevitable underutilisation that results, has been one of the main drivers behind x86 server virtualisation. Furthermore, many organisations now recognise the value of creating ‘pools’ of resources that can operate much closer to their potential capacity than has traditionally been achieved. So-called ‘private cloud’ architectures are relevant here.
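A rough sketch illustrates why pooling helps, using entirely hypothetical figures. Twelve standalone 8-core servers each run one workload. The same workloads are then consolidated onto 16-core virtualisation hosts run at no more than 75% utilisation. Some simplifications apply:

- The function names are illustrative.
- Cores stand in for all resources; memory, I/O and licensing constraints are ignored.
- Summing individual peaks conservatively assumes every workload peaks at the same moment.

```python
import math


def hosts_needed(peak_demands: list[int], host_cores: int,
                 target_utilisation: float) -> int:
    """Pooled hosts needed so combined peak demand fits within
    target_utilisation of each host's cores.

    Summing individual peaks is deliberately conservative: it assumes
    every workload hits its peak at the same moment."""
    usable_cores_per_host = host_cores * target_utilisation
    return math.ceil(sum(peak_demands) / usable_cores_per_host)


def utilisation(peak_demands: list[int], total_cores: int) -> float:
    """Fraction of provisioned cores in use at combined peak."""
    return sum(peak_demands) / total_cores


# Hypothetical estate: 12 standalone 8-core servers, one workload each.
peaks = [2, 1, 3, 2, 1, 2, 1, 2, 3, 1, 2, 1]   # peak cores used per workload
siloed = utilisation(peaks, 12 * 8)             # 21 of 96 cores

hosts = hosts_needed(peaks, host_cores=16, target_utilisation=0.75)
pooled = utilisation(peaks, hosts * 16)         # 21 of 32 cores

print(f"siloed: {siloed:.1%}; pooled on {hosts} hosts: {pooled:.1%}")
```

With these numbers, the twelve silos sit at under 22% utilisation even at combined peak. Two pooled hosts carry the same load at roughly 66%. Real consolidation ratios depend heavily on the workload mix and on whether peaks actually coincide.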

The virtualisation of servers has already led many organisations to change the way they procure systems, especially in terms of the size and expandability of platforms. Whilst it is fair to say that many early server virtualisation projects were justified by their ability to reduce hardware and software acquisition costs, virtualisation can also deliver other benefits, notably increased system and service resilience. Such systems often also deliver lower operational costs through reduced power consumption, higher staff productivity and decreased software licensing costs, though these savings are harder to quantify.

The fly in the ointment, however, is that obtaining investment for ‘shared infrastructure’ can pose significant challenges. Put simply, it takes a lot more people to say ‘yes’ when the proposal on the table is for something that will become a ‘corporate’ asset rather than something that will be owned and accounted for within a single department, division or cost centre.

Storage

Organisations are faced with the considerable challenge of storing ever greater volumes of data and providing access to it from an expanding portfolio of devices, 24/7. As with the server infrastructure, until recently most storage was acquired to support a specific business requirement or application. This has resulted in data centres housing distinct islands of frequently underused storage systems.

To address the rapid growth in storage and reduce the rising cost of holding data, organisations are increasingly looking to storage virtualisation and to tools that throttle storage growth (such as data deduplication, archiving and storage tiering).
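Deduplication is the most mechanical of these growth-throttling techniques, so a minimal sketch may help. It assumes fixed-size blocks and SHA-256 fingerprints. Each distinct block is stored once, and an ordered list of fingerprints (the ‘recipe’) is kept so the original data can be rebuilt. Commercial products often use variable-size chunking, compression and persistent indexes, so treat this only as an illustration of the principle. `dedupe_blocks` and `rebuild` are hypothetical names.

```python
import hashlib


def dedupe_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and store each distinct block once.

    Returns (store, recipe): store maps SHA-256 hex digest -> block bytes,
    recipe is the ordered list of digests needed to rebuild the data."""
    store: dict[str, bytes] = {}
    recipe: list[str] = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)   # keep only the first copy seen
        recipe.append(digest)
    return store, recipe


def rebuild(store: dict[str, bytes], recipe: list[str]) -> bytes:
    """Reassemble the original data from the stored blocks."""
    return b"".join(store[digest] for digest in recipe)


# Five 4 KiB blocks, but only two distinct patterns ("A" and "B").
data = b"A" * 4096 * 3 + b"B" * 4096 + b"A" * 4096
store, recipe = dedupe_blocks(data)
stored_bytes = sum(len(block) for block in store.values())
print(f"logical {len(data)} bytes, stored {stored_bytes} bytes")
```

In the example, five 4 KiB blocks contain only two distinct patterns, so 8 KiB is stored instead of 20 KiB. The recipe still reproduces the original byte for byte.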

But, once again, procuring storage capacity that is shared amongst various user groups will challenge typical project- or cost-centre-based funding models.

Software

As the IT infrastructure becomes more flexible through the use of server and storage virtualisation it is obvious that software licensing models will have to evolve if maximum business value is to be delivered. This will cause many headaches as IT professionals and vendors search for licensing terms and conditions able to cater for rapid growth and contraction of software usage.

Some interested parties have promoted “pay-per-use” models as an obvious solution, whereby the organisation pays for software in line with consumption, but this approach is notoriously difficult to budget for. With the vast majority of businesses operating on carefully planned budgets fixed in advance, variable charging is difficult to manage except in limited circumstances.
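A little arithmetic illustrates the budgeting problem. The sketch assumes a hypothetical fixed licence costing 1,000 a month (equivalent to 100 users). It compares this with a pay-per-use alternative charging 10 per user per month, using invented monthly user counts. Neither the prices nor the usage pattern come from any real vendor.

```python
# Hypothetical figures for illustration only.
UNIT_PRICE = 10          # pay-per-use charge per user per month
FIXED_MONTHLY = 1_000    # fixed licence cost per month (equivalent to 100 users)

monthly_users = [80, 85, 90, 150, 160, 70, 60, 95, 100, 140, 110, 90]


def monthly_variance(usage: list[int], unit_price: int,
                     budget_per_month: int) -> list[int]:
    """Pay-per-use charge minus the fixed monthly budget, for each month.
    Positive values are overspends; negative values are underspends."""
    return [users * unit_price - budget_per_month for users in usage]


variance = monthly_variance(monthly_users, UNIT_PRICE, FIXED_MONTHLY)
print("annual variance:", sum(variance))
print("worst month:", max(variance), "best month:", min(variance))
```

Over the year, the pay-per-use bill overshoots the fixed budget by only 300. Yet individual months swing from 400 under to 600 over. That is exactly the kind of variance a budget fixed in advance struggles to absorb, whereas the fixed licence has none.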

There is little doubt that the already complex world of software licence management, with its often unwieldy terms and conditions and associated charges, will become even more difficult to optimise in the years ahead. But it is also an area with great scope for cost reduction. Very few organisations use the most cost-effective software licences available, or indeed have anything like an accurate picture of the software they have deployed, how it is used, and what support and maintenance charges are being incurred for software that is no longer in use.
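Part of that missing picture comes down to reconciling what is paid for against what is used. The sketch assumes a hypothetical licence register (product name and annual maintenance charge) and a record of when each product was last used. It flags maintenance being paid on products idle for longer than a chosen threshold. The product names, charges, dates and the 180-day threshold are all invented for illustration. A real exercise would draw on software asset management and usage-metering data.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Licence:
    product: str
    annual_maintenance: int   # support/maintenance charge per year


def idle_maintenance(licences: list[Licence], last_used: dict[str, date],
                     today: date, idle_days: int = 180) -> list[tuple[str, int]]:
    """Return (product, annual_maintenance) for every licence whose product
    has no recorded use, or has not been used within idle_days of today."""
    cutoff = today - timedelta(days=idle_days)
    flagged = []
    for lic in licences:
        seen = last_used.get(lic.product)
        if seen is None or seen < cutoff:
            flagged.append((lic.product, lic.annual_maintenance))
    return flagged


# Invented register and usage records.
register = [
    Licence("reporting-tool", 4_000),
    Licence("legacy-crm", 15_000),
    Licence("cad-suite", 8_000),
]
last_used = {"reporting-tool": date(2013, 5, 1), "cad-suite": date(2012, 6, 30)}

flagged = idle_maintenance(register, last_used, today=date(2013, 6, 1))
for product, charge in flagged:
    print(f"{product}: {charge} a year in maintenance with no recent use")
print("total:", sum(charge for _, charge in flagged))
```

Here the CRM with no recorded use and the long-idle CAD suite are flagged. Together they account for 23,000 a year in maintenance.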

Systems Integration / standards

The need to procure servers, storage, networking and software that can be modified rapidly as end-user requirements change is already leading some organisations to investigate different ways of acquiring IT resources. And with systems integration and optimisation always an area in which IT professionals expend considerable effort, the question arises: is it better to build systems from distinct pools of servers, storage and networking, or is it better, easier or faster to buy pre-configured solutions in which all the elements are already assembled in the box?

The advantage of acquiring pre-built solutions, such as VCE, IBM PureSystems, HP Converged Infrastructure, Dell Converged Infrastructure or those available from other major suppliers, is that many basic interconnect challenges are removed. More importantly, such systems usually come with management tools designed to allow all aspects of the system to be administered from a single console, potentially by a team of IT generalists rather than storage, server and networking specialists.

The issue for some potential users is that such solutions are relatively large and are designed to support multiple workloads. This can, once again, make procuring such systems using project-based funding difficult.